Dev Log #1

Hello and welcome to our inaugural Development Log, the first in a series of regular posts where we’ll share what we are doing, our thoughts on technology, and a few links and projects that have sparked our curiosity.

The summer passed with travel, the kickoff of exciting new projects, and office upgrades - the highlight being our new Håg Capisco chairs, which have changed our lives (yes, really). Here are a few things we’ve been working on:

- Data & Human Rights in South Korea

- SQL databases directly in the browser

- Pushing the limits of OpenLayers

- Doubles for timestamp values

- List of Links

Data & Human Rights in South Korea

As part of our work with Security Force Monitor (SFM), we were invited to present at an event in Seoul: Combating North Korea's Crime Of Enforced Disappearance, expertly organized by the Transitional Justice Working Group (TJWG). We were thrilled to showcase our work with SFM on Myanmar and to dive deep into discussions on human rights data models, preserving testimonies, linking data points and relationships to get a bigger picture, and using graph analysis to understand command structures.

The work that TJWG does is incredibly important. They keep data at extremely high fidelity and use modern digital archival techniques.

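import initSqlJs from "sql.js";
import dump from "@/data/latest.sql";
// Assumption: wasmUrl holds the URL of the sql.js wasm binary (for example, provided by the bundler).

// Create an in-memory database and load the SQL dump into it.
const create = (dump) =>
  initSqlJs({
    // Required to load the wasm binary asynchronously.
    locateFile: () => wasmUrl
  })
    .then((SQL) => {
      return new SQL.Database();
    })
    .then((db) => {
      console.time('adding statements to initial database');
      db.exec(dump);
      console.timeEnd('adding statements to initial database');
      return db;
    });

// Return every company whose name contains the search term.
const searchCompany = (term, db) => {
  // The wildcards belong in the bound value, not in the SQL text.
  const sql = 'SELECT * FROM companies WHERE name LIKE :term';
  const stmt = db.prepare(sql);
  stmt.bind({ ':term': `%${term}%` });
  const res = [];
  while (stmt.step()) {
    res.push(stmt.getAsObject());
  }
  stmt.free(); // release the prepared statement
  return res;
};

create(dump).then((db) => searchCompany("Microsof", db));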

Databases In The Browser •͡˘㇁•͡˘

We recently became very excited about running an embeddable database directly in the browser after watching the conference Have You Tried Rubbing A Database On It?, specifically Nikita's talk “Your frontend needs a database” and Nicholas’s talk about reactivity in SQL, which explores how an application's components can update whenever the embedded database changes.

This month, we’ve been experimenting with sql.js, which we ran with a 17 MB database (2.9 MB gzipped) imported from a database dump file. With just a few minor tweaks, it handles type-ahead search really well. These days, 2.9 MB is an acceptable transfer size, and because every query runs locally, search feels instantaneous.
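One tweak that tends to matter for type-ahead search is how the raw input becomes a LIKE pattern. The sketch below is only an illustration under assumptions, not the code we shipped: `toLikePattern` and `SEARCH_SQL` are hypothetical names, and the `LIMIT 20` is an arbitrary choice to keep suggestion lists short. It escapes the LIKE wildcards so that a user typing `%` or `_` searches for those characters literally.

```javascript
// Hypothetical helper: turn raw user input into a LIKE pattern.
// Escapes the LIKE wildcards (% and _) and the escape character (\) itself.
function toLikePattern(term, { prefixOnly = false } = {}) {
  const escaped = term.replace(/[\\%_]/g, (ch) => `\\${ch}`);
  // prefixOnly matches "starts with"; otherwise match anywhere in the value.
  return prefixOnly ? `${escaped}%` : `%${escaped}%`;
}

// The query has to declare the escape character for the escaping to take effect.
// The companies table and name column come from the example above; LIMIT 20 is an assumption.
const SEARCH_SQL =
  "SELECT * FROM companies WHERE name LIKE :term ESCAPE '\\' LIMIT 20";
```

With sql.js, the pattern would then be bound as the `:term` parameter of a prepared statement, in the same way as in `searchCompany`.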

This is exciting to us because we can now use the same kind of model we used to publish Under Whose Command for Security Force Monitor. In that project, we used a DataScript database generated from versions of SFM data, separating the researchers' mutable database from the published, frozen one.

export const sourcesOf = (entityId) =>
  pipe(
    () => [
      `{:find [[(pull ?s [* {:source/publication:ref [*]}]) ...]]
        :where [[?e :entity/id #uuid"${entityId}"]
                [?e :claim/citation:refs ?c]
                [?c :citation/source:ref ?s]]}`,
    ],
    db.query,
    map(entities.transformKeys)
  )()

The application runs as a static site, with the database shipped as a static resource. Read the case study

This approach shortens development time by cutting the need for APIs and server calls, replacing them with front-end queries on a static page. Hosting and maintenance become easier, and whenever you need to update the data, you can simply export it and rebuild the site. The website has been running for almost a year now with no server to maintain and no updates required.

In the future, it would be very interesting to do this again in SQL, since so many more people know and use the language, though we will miss the beauty and composability of Datalog queries:

{:find [[(pull ?s [* {:source/publication:ref [*]}]) ...]]
 :in [$ ?id]
 :where [[?e :entity/id ?id]
         [?e :claim/citation:refs ?c]
         [?c :citation/source:ref ?s]]}

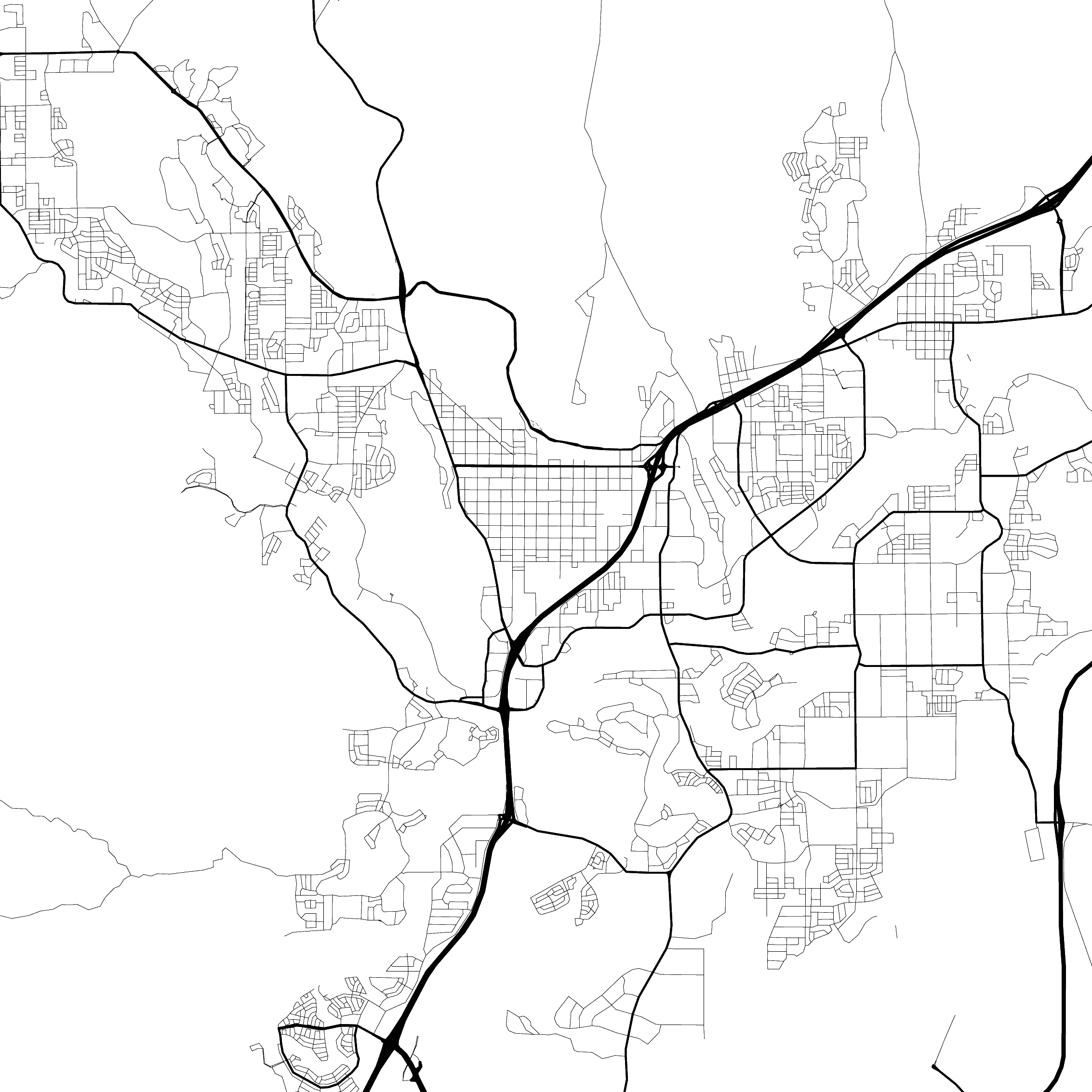

Pushing the limits of OpenLayers

One of our new projects involves showing multiple areas of a GeoTIFF simultaneously. So, just how many OpenLayers maps can we display side by side? The answer is: it depends!

With a shared data source, we think it’s possible to push this pretty far. But there’s a catch: GeoTIFF layers render with WebGL, not with tiles, which limits us to a maximum of 16 WebGL contexts per page. We’re excited to see how much further we can push the limits of OpenLayers.

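import Map from 'ol/Map';
import View from 'ol/View';
import TileLayer from 'ol/layer/Tile';
import OSM from 'ol/source/OSM';

// One tile source and one view, shared by every map.
const s = new OSM();
const view = new View({
  center: [-6655.5402445057125, 6709968.258934638],
  zoom: 13,
});

// Render 50 maps into the elements with ids map-0 to map-49.
for (let index = 0; index < 50; index++) {
  const l = new TileLayer({
    source: s,
  });
  new Map({
    target: `map-${index}`,
    layers: [l],
    view: view,
  });
}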

This works for displaying many maps that use only tiles, but with GeoTIFF the browser starts falling apart at 16.
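One possible way to live with a context cap is to keep only a bounded number of maps alive at a time and tear down the least recently used one when a new map is needed. The sketch below is a plain-JavaScript illustration of that idea, not something we have built: `MapBudget`, `MAX_WEBGL_MAPS`, and the `create`/`dispose` callbacks are hypothetical names, and whether disposing a map actually releases its WebGL context would still need to be verified in the browser.

```javascript
// Assumed cap, taken from the 16-context limit described above.
const MAX_WEBGL_MAPS = 16;

// Keeps at most `limit` instances alive, evicting the least recently used one.
class MapBudget {
  constructor(limit, { create, dispose }) {
    this.limit = limit;
    this.create = create;   // (id) => instance, e.g. builds a map for that target
    this.dispose = dispose; // (instance, id) => void, tears the instance down
    // Built-in Map (not ol/Map); insertion order doubles as least-recently-used order.
    this.live = new Map();
  }

  // Return the live instance for `id`, creating it (and evicting the oldest) if needed.
  acquire(id) {
    if (this.live.has(id)) {
      const instance = this.live.get(id);
      this.live.delete(id);
      this.live.set(id, instance); // move to the most-recently-used position
      return instance;
    }
    if (this.live.size >= this.limit) {
      const [oldestId, oldest] = this.live.entries().next().value;
      this.live.delete(oldestId);
      this.dispose(oldest, oldestId);
    }
    const instance = this.create(id);
    this.live.set(id, instance);
    return instance;
  }
}
```

In an OpenLayers page, `create` could build a map for the element `map-${id}` and `dispose` could call `setTarget(undefined)` and `dispose()` on it, with `acquire` triggered when a map container scrolls into view.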

(let [db   (d/create-database "datomic:mem://test")
      conn (d/connect "datomic:mem://test")]
  ;; transact the schema. attribute :num is a double
  @(d/transact conn [{:db/ident       :num
                      :db/valueType   :db.type/double
                      :db/cardinality :db.cardinality/one}])
  ;; add a few entities with different :nums
  @(d/transact conn [[:db/add -1 :num ##Inf]
                     [:db/add -2 :num (double (u/now))]
                     [:db/add -3 :num 233.0]])
  ;; query that db
  (d/q '{:find  [(pull ?e [*])]
         :where [[?e :num ?n]
                 [(> ?n 999)]]}
       (d/db conn)))

Using doubles for timestamp values

Our command chain project at SFM requires us to store timestamps as numeric values in our Datomic database, including special concepts like “from the beginning of time” or “until the end of time”, so that we can represent open-ended time ranges and query within them.

Previously, we used longs for this. Switching to doubles lets us compare against infinity, and it also brought some serious performance gains: running a time-based graph traversal on ~90,000 paths returns information in under 30 seconds.
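To illustrate why infinity is convenient here, the following is a small JavaScript sketch rather than our Datomic code. JavaScript numbers are IEEE 754 doubles, so `-Infinity` and `Infinity` behave like the double infinities described above. The names `makeRange`, `contains`, and `overlaps` are hypothetical, and timestamps are assumed to be plain numbers such as epoch milliseconds.

```javascript
// Doubles can represent both ends of an open-ended time range directly.
const BEGINNING_OF_TIME = -Infinity;
const END_OF_TIME = Infinity;

// Build a range; a missing bound means the range is open on that side.
function makeRange(from = BEGINNING_OF_TIME, until = END_OF_TIME) {
  if (from > until) {
    throw new RangeError("range start must not be after range end");
  }
  return { from, until };
}

// True if timestamp t falls inside the range (bounds inclusive).
function contains(range, t) {
  return range.from <= t && t <= range.until;
}

// True if the two ranges share at least one point in time.
function overlaps(a, b) {
  return a.from <= b.until && b.from <= a.until;
}
```

Because ordinary comparisons already work against the infinities, open-ended ranges need no special case: `contains(makeRange(undefined, 1000), 5)` and `overlaps(makeRange(0), makeRange(undefined, 10))` both use the same two comparisons as bounded ranges.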

What we are excited about

PostGIS in Action: this book has been the best formalization of our PostGIS knowledge to date.

The fashion statement that is this Bell Laboratories video

The recent Clojure Meetup in Berlin, and its two fantastic talks about clj-reload and using ClojureDart to develop apps